There are, of course, other reasons why captions are quickly becoming an essential component of media production, and it’s not just because of the memes. While some translation services can work directly from the original media, offering a caption file in the original language can help to speed the process up.

(Certainly a lot less involved than dubbing and ADR.) Global reachĪnd for those of us working with global markets, it’s long been known that captions are the easiest way to repurpose your film and video content for audiences who speak a different language. It’s estimated that as much as 85 percent of video views are taking place with the sound turned off. So if you want to improve the signal-to-noise ratio of your social media content, captions are now an essential part of the process. Muted autoplay is quickly becoming the norm for video in scrolling social feeds, and it’s estimated that as much as 85 percent of video views are taking place with the sound turned off. While meeting accessibility requirements is an excellent justification for captioning, it’s also beneficial to audiences who don’t suffer from hearing loss, especially when it comes to video in social media. So these days it’s probably safer to assume that captions are required by law in the country/state where you operate than to find out the hard way.

#Captions adobe premiere pro 2021 tv#

In the US, the FCC has already made it a legal requirement that all TV content broadcast in America must be closed captioned, and any subsequent streaming of this content falls under the same rules.Īnd while it’s true that content that is uniquely broadcast over the Internet falls outside of these regulations, legislation including the Americans with Disabilities Act (ADA) has already been successfully used as the basis for lawsuits against streaming platforms like Netflix and Hulu. It’s worth noting that section 7.3 of the current WCAG indicates that media without captions is deemed a critical error that automatically fails the rating process. The laws around accessibility are different across the world, but the closest we have to a global standard are the Web Content Accessibility Guidelines (WCAG) published by the World Wide Web Consortium (W3C). The motivation for automatically generating captions is likely to depend on your business perspective.įor example, companies like Google and Facebook want it because it makes video indexable and searchable, allowing us to find content inside videos (and for them to sell ad slots based on the context).īut for video producers and distributors, the need for captions is probably coming from a different place. So it wasn’t really surprising that Adobe pulled Speech Analysis from public release in 2014, and stayed silent on the matter until the fabulous Jason Levine brought it back into the spotlight in 2020. As one commenter put it “in my experience it does such a bad job that the time it’d take me to correct it is considerably more than the time it’d take me to transcribe it myself.”Īnd that, in a nutshell, was the problem.

Google’s auto-transcription for YouTube videos was just as unreliable.

#Captions adobe premiere pro 2021 software#

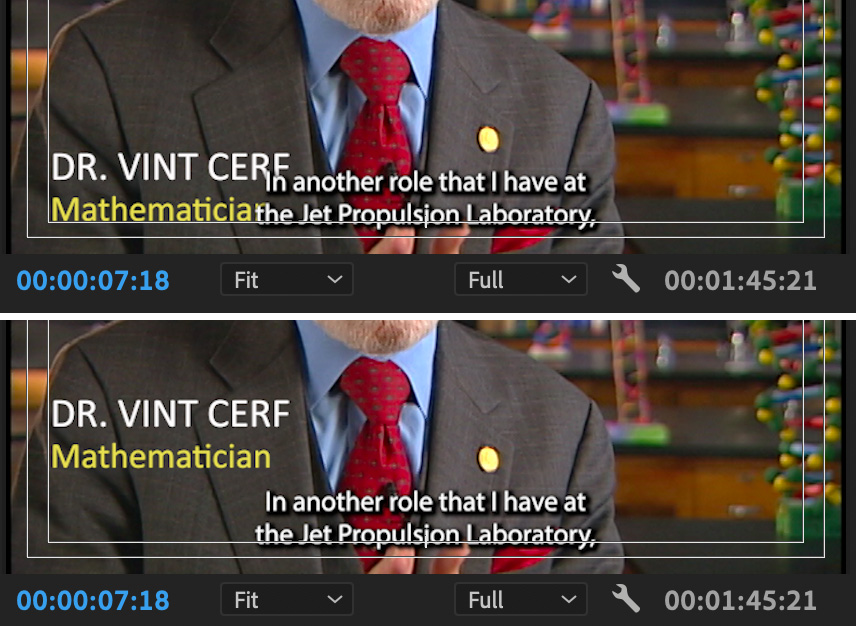

Premiere Pro Speech-to-Text is not Adobe’s first attempt.īut to be fair, the same was also true of other software at that time. When I tested it back then, the best description for the results it produced would be word salad.

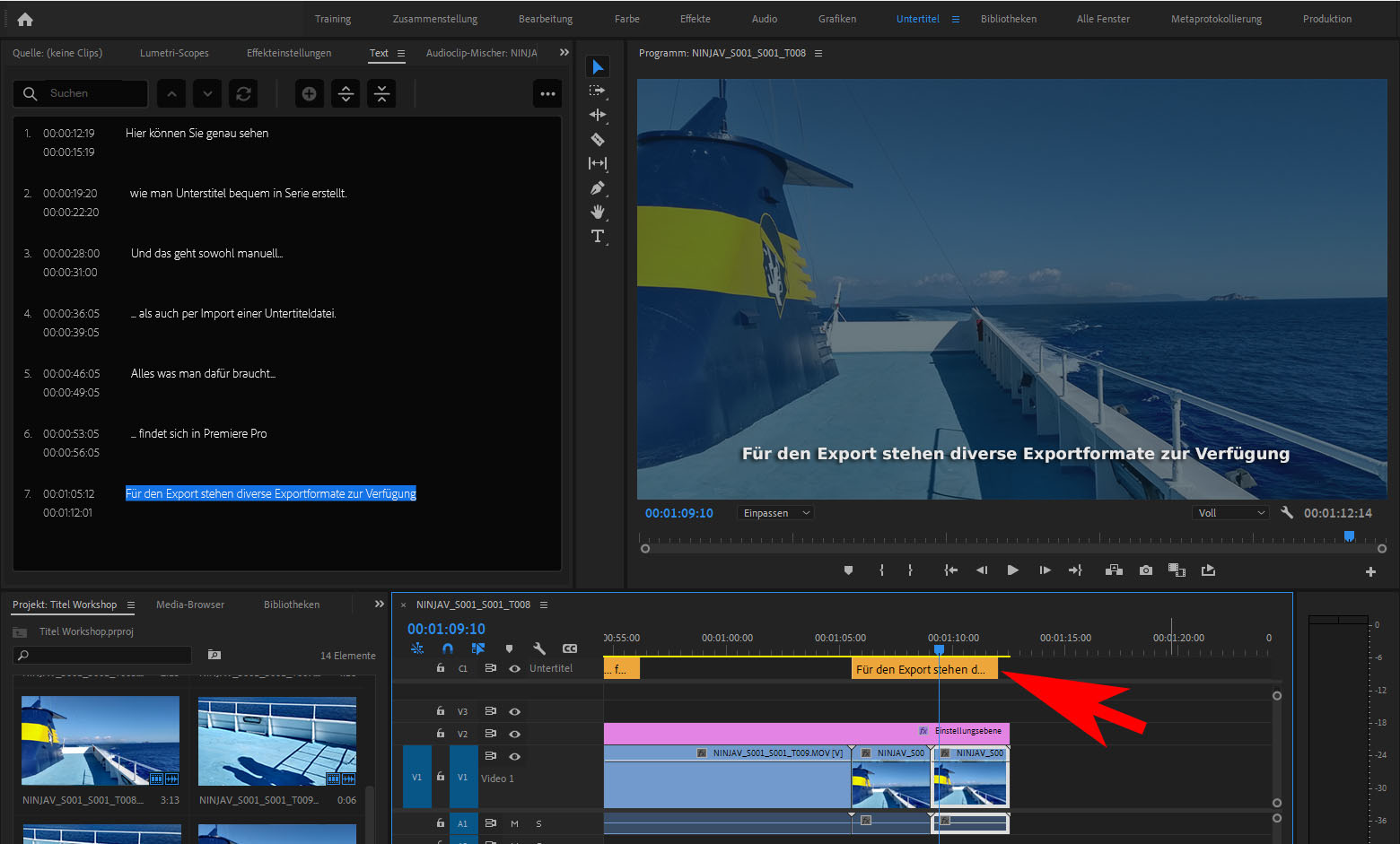

Speech Analysis was added to Premiere Pro back in 2013. Let’s take a moment to recall that this is not Adobe’s first attempt at releasing a tool for converting recorded audio into editable text. Let’s take a look at why you might want it, how you might use it, and whether or not this machine learning tool can augment your productivity. For that, you should be looking at the least talked-about AI feature in Premiere Pro-Speech-to-Text. But while turning the clock forward on your face is fun, and swapping that blown-out skyline with a stock sunset makes a landscape prettier, it’s hard to see much commercial value in these tools. Mobile and social were (and always will be) focal points, but Artificial Intelligence (AI) and Machine Learning (ML)-or Sensei as Adobe has chosen to brand them-took the stage in a number of surprising ways.Īs always, a lot of the airtime was given over to Photoshop, which added a bundle of Sensei-driven tools called Neural Filters that include image upscaling, sky replacement, and portrait aging. If you watched the launch videos at Adobe MAX 2020 you probably noticed a few trends forming during the product demos.
